Multimodal AI Market Outlook:

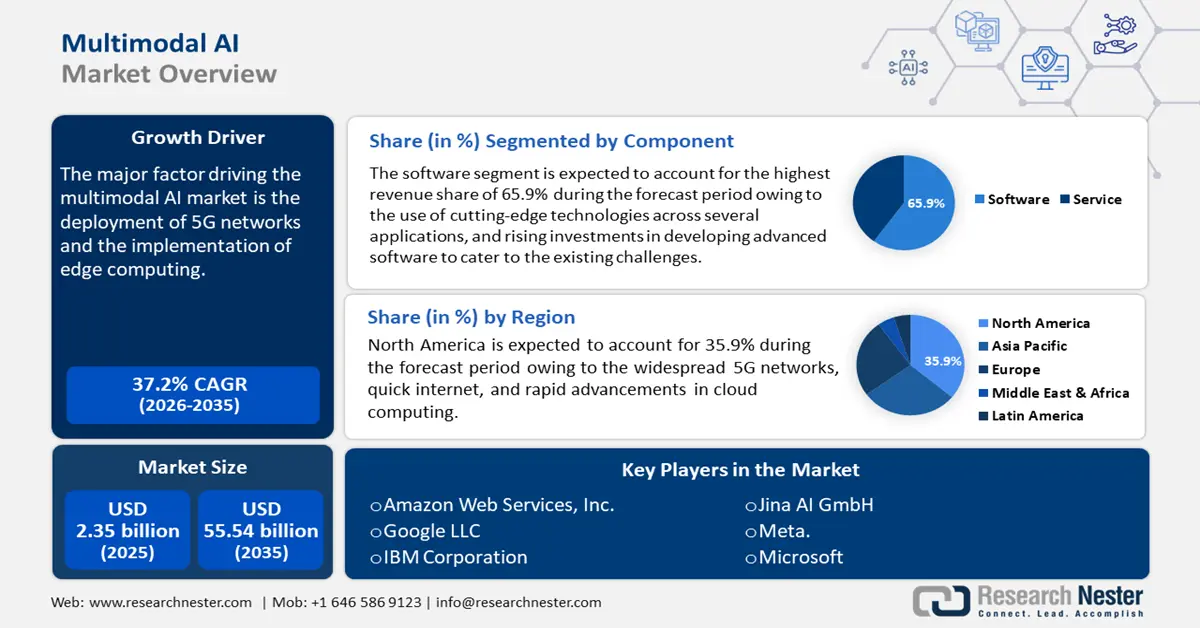

The multimodal AI market was valued at over USD 2.35 billion in 2025 and is projected to exceed USD 55.54 billion by 2035, growing at a CAGR of over 37.2% during the forecast period (2026-2035). In 2026, the market is estimated at USD 3.14 billion.
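The headline figures follow the standard compound annual growth rate (CAGR) relationship, end value = start value × (1 + r)^n. The Python sketch below is an illustrative check only, not the report's methodology. It assumes the 37.2% rate compounds over ten annual periods from the 2025 base. The helper names `cagr` and `project` are hypothetical.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate implied by two values `periods` years apart."""
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached by compounding `start_value` at `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


# Market sizes quoted in the report, in USD billion
size_2025 = 2.35
size_2026 = 3.14
size_2035 = 55.54

# Assumption: 37.2% compounds over 10 annual periods starting from the 2025 base
print(f"2035 size projected from 2025 base: {project(size_2025, 0.372, 10):.2f}")
print(f"Implied CAGR 2025-2035: {cagr(size_2025, size_2035, 10):.1%}")
print(f"Implied CAGR 2026-2035: {cagr(size_2026, size_2035, 9):.1%}")
```

Compounding the 2025 base reproduces roughly USD 55.54 billion. If you start instead from the 2026 estimate and compound over nine periods, the same formula implies a rate of about 37.6%. The quoted CAGR therefore appears to be anchored to the 2025 base year.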

The major factor driving the multimodal AI market is the deployment of 5G networks and the adoption of edge computing across several sectors. Edge computing processes data closer to its source, which reduces latency and bandwidth consumption for real-time multimodal AI applications. This is particularly useful for Internet of Things (IoT) devices and smart systems, which depend on fast data processing to function properly. 5G has enhanced network capabilities, providing the reliability and speed needed to handle large volumes of multimodal data. For instance, Datasea, Inc.’s Chinese subsidiaries, Shuhai Information Technology Co., Ltd. and Guozhong Times Technology Co., Ltd., signed an agreement with Qingdao Ruizhi Yixing Information Technology Co., Ltd. to supply that company with a new range of advanced 5G-AI multimodal services.

The rise of multimodal AI can be attributed to advances in human-machine interfaces, which give consumers more intuitive and natural ways to engage with technology. Multimodal AI combines inputs such as speech, writing, gestures, and visual signals to better understand and respond to human commands. This has made experiences smoother and more immersive across a wide range of applications. In March 2024, Apple announced MM1, its first multimodal AI model, which could transform Siri and iMessage by interpreting text and images in context. Its in-context learning capability allows the model to describe images and answer questions about photo-based prompts, even for content it has not seen before.
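The report does not specify how multimodal systems combine their inputs. As one illustrative assumption, the sketch below shows *late fusion*. In this approach, each modality (here, hypothetical speech, gesture, and vision classifiers) produces its own probabilities over user intents. The system then merges them with a weighted average before choosing a response. The intent labels, weights, and scores are invented for illustration.

```python
from typing import Dict

# Hypothetical per-modality output: probability assigned to each user intent
Scores = Dict[str, float]


def late_fusion(modality_scores: Dict[str, Scores], weights: Dict[str, float]) -> Scores:
    """Combine per-modality intent probabilities with a weighted average (late fusion)."""
    # Normalise weights over the modalities actually present in this request
    total_weight = sum(weights[m] for m in modality_scores)
    fused: Scores = {}
    for modality, scores in modality_scores.items():
        w = weights[modality] / total_weight
        for intent, p in scores.items():
            fused[intent] = fused.get(intent, 0.0) + w * p
    return fused


# Illustrative numbers only: speech and gesture disagree; the weighted average resolves it
inputs = {
    "speech": {"play_music": 0.6, "set_timer": 0.4},
    "gesture": {"play_music": 0.3, "set_timer": 0.7},
    "vision": {"play_music": 0.8, "set_timer": 0.2},
}
weights = {"speech": 0.5, "gesture": 0.2, "vision": 0.3}

fused = late_fusion(inputs, weights)
best = max(fused, key=fused.get)
print({intent: round(p, 2) for intent, p in fused.items()}, "->", best)
```

The weights are renormalised over the modalities present. As a result, the same function still returns a valid probability distribution when one input is missing, for example when no gesture is captured.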

Key Multimodal AI Market Insights Summary:

Regional Highlights:

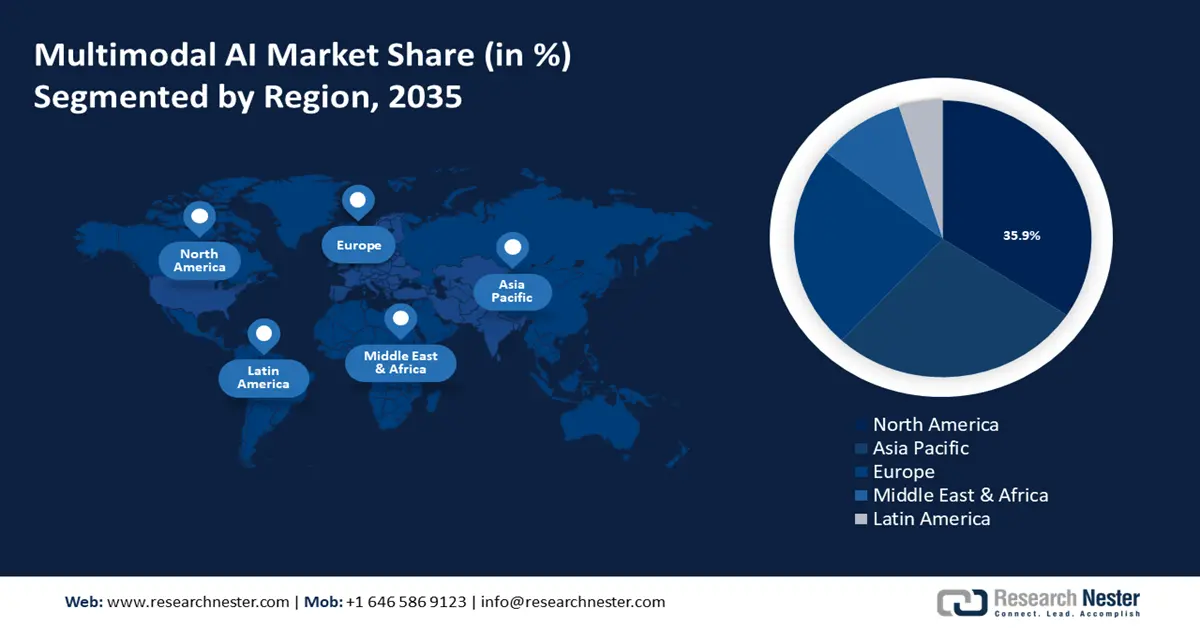

- The North America multimodal AI market is expected to account for a 35.90% share by 2035. This is driven by sophisticated technological infrastructure, widespread 5G networks, high-speed internet, and cloud computing resources that enable real-time data processing.

- The Asia Pacific market is expected to register a stable CAGR during 2026-2035. This is driven by the rapid adoption of cutting-edge technologies across sectors such as e-commerce, healthcare, and finance in the region's countries.

Segment Insights:

- The software segment in the multimodal AI market is projected to hold a 65.90% share by 2035, owing to advanced AI technologies that manage multiple data types effectively.

Key Growth Trends:

- Growing need for solutions tailored to individual industries

- Rising need in the automotive industry

Major Challenges:

- Bias potential in multimodal models

- Restrictions on transferability

Key Players: Aimesoft, Amazon Web Services, Inc., Google LLC, IBM Corporation, Jina AI GmbH, Meta, Microsoft, OpenAI, L.L.C., and Twelve Labs Inc.

Global Multimodal AI Market Forecast and Regional Outlook:

Market Size & Growth Projections:

- 2025 Market Size: USD 2.35 billion

- 2026 Market Size: USD 3.14 billion

- Projected Market Size: USD 55.54 billion by 2035

- Growth Forecasts: 37.2% CAGR (2026-2035)

Key Regional Dynamics:

- Largest Region: North America (35.9% Share by 2035)

- Fastest Growing Region: Asia Pacific

- Dominant Countries: United States, China, Japan, Germany, United Kingdom

- Emerging Countries: China, India, Japan, South Korea, Singapore

Last updated on: 18 September 2025

Multimodal AI Market Growth Drivers and Challenges:

Growth Drivers

- Growing need for solutions tailored to individual industries: As AI technologies evolve, demand is increasing for customized software and solutions that address specific industry goals and challenges. In healthcare, for example, multimodal AI could transform patient care and medical research. It can analyze medical images, textual patient records, and even audio recordings of doctor-patient conversations to provide comprehensive diagnostic insights. In August 2024, Fractal announced the launch of vaidya.ai, a multimodal healthcare platform designed to provide free, easily accessible assistance to patients.

- Rising need in the automotive industry: Multimodal AI is being used in the automotive industry to develop advanced driver assistance systems (ADAS). These systems combine sensor data, audio from in-car voice assistants, and visual data from cameras to improve road safety and the driving experience. This sector-specific approach is opening the door to a new wave of innovation, in which customized multimodal AI solutions address the particular opportunities and challenges each business faces.

Several automotive companies are using multimodal AI to streamline their processes. For instance, BMW Group recently launched an initiative that uses generative AI (GenAI) to simplify procurement tasks and improve supplier interaction. The company plans to partner with AWS, BCG Platinion, and BCG X to ensure scalable and reliable GenAI integration.

- Using generative AI approaches to expedite the construction of multimodal ecosystems

Generative AI is often described as the creative powerhouse of the AI field. It can generate text, images, and even full videos, including content that blends several data types. For example, it can synthesize realistic images from text descriptions, write detailed explanations of photos, or produce videos that reflect a sophisticated understanding of the subject matter. Multimodal AI and generative AI intersect in this merging of data types.

In content creation, for instance, a multimodal AI system powered by generative AI can automatically create marketing materials that combine text, graphics, and videos for a more engaging, personalized user experience. It can also create interactive instructional content that adapts to each learner's learning style, boosting engagement and comprehension. Additionally, it can automate the production of multimedia presentations, increasing their impact and educational value.

Challenges

- Bias potential in multimodal models: Like their unimodal counterparts, multimodal AI models are susceptible to bias that stems from their training data. Training datasets, which include text, images, videos, and other media, can unintentionally reflect societal or cultural prejudices present in the data sources. These biases take many forms. In image recognition they may be racial or gender-based, while in natural language processing tasks they may be linguistic or contextual. Multimodal AI models trained on such data can inherit and perpetuate these biases, which may lead to unfair or erroneous predictions and decisions.

- Restrictions on transferability: Limited transferability is a key constraint on the flexibility and adaptability of these AI systems. Multimodal AI models trained on one type of data may not adapt or perform well when confronted with a new type of data. A conductor trained in classical music may struggle in the same way when leading a jazz band. This constraint calls for caution, particularly when deploying these models in dynamic and varied real-world contexts.

The difficulty arises because the knowledge learned during training is intrinsically tied to the particular modalities, patterns, and features of the training dataset. These models frequently struggle to make accurate predictions or derive meaningful insights when they encounter novel or different data types. Examples include a shift from text to visual data or from structured to unstructured data.
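The bias challenge described above often begins with how groups are represented in the training data. The Python below is a minimal sketch of one simple check. It assumes each training sample carries a hypothetical group annotation, for example a tag on an image-caption pair. For each group, it reports the group's share of the dataset and flags groups that fall below a chosen threshold. The records, field names, and 10% threshold are illustrative assumptions. A representation count is only a first screen and does not by itself measure biased outcomes.

```python
from collections import Counter
from typing import Dict, List, Tuple


def representation_report(
    samples: List[dict], key: str, min_share: float
) -> Dict[str, Tuple[float, bool]]:
    """Return each group's share of the dataset and whether it falls below `min_share`."""
    counts = Counter(sample[key] for sample in samples)
    total = sum(counts.values())
    return {
        group: (count / total, count / total < min_share)
        for group, count in counts.items()
    }


# Hypothetical image-caption pairs annotated with a group tag
samples = (
    [{"modality": "image+text", "group": "A"}] * 70
    + [{"modality": "image+text", "group": "B"}] * 25
    + [{"modality": "image+text", "group": "C"}] * 5
)

for group, (share, under) in representation_report(samples, "group", 0.10).items():
    flag = "  <- under-represented" if under else ""
    print(f"group {group}: {share:.0%}{flag}")
```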

Multimodal AI Market Size and Forecast:

| Report Attribute | Details |
|---|---|
| Base Year | 2025 |
| Forecast Period | 2026-2035 |
| CAGR | 37.2% |
| Base Year Market Size (2025) | USD 2.35 billion |
| Forecast Year Market Size (2035) | USD 55.54 billion |
| Regional Scope | |

Multimodal AI Market Segmentation:

Component

The software segment is set to hold over 65.9% of the multimodal AI market share by the end of 2035. Multimodal AI software consists of integrated systems designed to manage and process multiple data types at once, including text, audio, video, and images. To interpret multimodal information thoroughly, these solutions frequently use technologies such as machine learning (ML), deep learning (DL), and natural language processing (NLP). Multimodal AI software enables users to design, develop, and supervise AI models that can effectively handle a variety of data modalities. In July 2024, Meta launched new software, an AI text-to-3D generator that can generate or retexture 3D objects in under one minute.

Data Modality

The speech & voice data segment is projected to witness significant growth in the multimodal AI market during the forecast period. Speech and voice data have grown in importance due to the widespread adoption of voice-enabled devices, virtual assistants, and voice-activated apps across multiple industries. Segment growth is also boosted by advances in speech recognition technology, improved language processing algorithms, and growing acceptance of voice commands in smart devices. Speech and voice data integrate seamlessly into multimodal AI applications, further solidifying the segment's position as a major multimodal AI market driver.

For instance, in November 2023, Microsoft announced a personal voice feature for Azure AI Speech, a step forward in voice customization. The feature is designed to help companies such as Swisscom, Progressive, Vodafone, and Duolingo build apps that allow users to create their own AI voice.

Our in-depth analysis of the multimodal AI market includes the following segments:

- Component

- Data Modality

- End Use

- Enterprise Size


Multimodal AI Market Regional Analysis:

North America Market Insights

North America is likely to hold the largest revenue share, 35.9%, by 2035. The region's sophisticated technological infrastructure makes it easier to deploy multimodal AI systems. Widespread 5G networks, high-speed internet, and abundant cloud computing resources provide the infrastructure needed to implement and scale these systems. This infrastructure enables the real-time processing and integration of data from multiple sources that multimodal AI applications require. For instance, according to Research Nester analysts, North America will have close to 406 million 5G subscriptions by 2028.

The U.S. stands out for significant government and private-sector investment in AI research and development. Notable technology giants such as Google, Microsoft, Amazon, and IBM are headquartered in the country. These companies invest heavily in developing innovative AI technologies, including multimodal AI.

In Canada, a surge of new companies is intensifying competition in the multimodal AI market. Government grants and initiatives that promote collaboration between commercial and university researchers also boost multimodal AI market growth.

Asia Pacific Market Insights

The Asia Pacific multimodal AI market is expected to register a stable CAGR during the forecast period. One important contributing factor is the rapid adoption and integration of cutting-edge technologies across several sectors. The economies of Asia Pacific, including China, Japan, South Korea, and India, have grown significantly, raising investment in AI. Demand for multimodal AI applications in industries such as e-commerce, healthcare, and finance has been fueled by two factors: the region's large and diverse consumer base, and the widespread use of smartphones and other smart devices.

In South Korea, the government is actively promoting AI research and development through various funding and programmatic efforts, aiming to position the country as a global leader in AI technology. Multimodal AI combines data from wearables, imaging, and medical records to provide comprehensive patient care. In South Korea, it is being used to enhance personalized healthcare and telemedicine services.

Driven by significant investment, abundant data, and a dedicated government push for AI leadership, China's multimodal AI market is growing swiftly. Chinese tech giants, including Baidu, Alibaba, and Tencent, are making significant investments in multimodal AI research and applications, ranging from autonomous driving to smart city services. Healthcare organizations are also using multimodal AI to improve patient outcomes and diagnostic accuracy.

AI is being used to analyze data from patient monitoring devices, medical records, and imaging. The Chinese government aims to make the country a leader in AI by 2030, with significant investments in talent development, research, and infrastructure. China's vast data resources give it a competitive advantage in training sophisticated AI models.

Multimodal AI Market Players:

- Reka AI, Inc.

  - Company Overview

  - Business Strategy

  - Key Product Offerings

  - Financial Performance

  - Key Performance Indicators

  - Risk Analysis

  - Recent Development

  - Regional Presence

  - SWOT Analysis

- Aimesoft

- Amazon Web Services, Inc.

- Google LLC

- IBM Corporation

- Jina AI GmbH

- Meta

- Microsoft

- OpenAI, L.L.C.

- Twelve Labs Inc.

The global multimodal AI market is highly competitive, with several technology giants alongside local software and hardware manufacturers. Many research organizations are also at the forefront of this competitive landscape, each contributing unique innovations and technologies.

Together, these businesses control the lion's share of the multimodal AI market and set the direction of industry trends. They also pursue strategic moves such as mergers and acquisitions, partnerships, product launches, and joint ventures to expand their product base and stay competitive. To map the supply network, these companies' financials, strategy maps, and products are examined. The leading players in the multimodal AI market are listed above.

Recent Developments

- In October 2023, Reka AI, Inc. launched Yasa-1, a multimodal AI assistant that extends its understanding beyond text to images, short videos, and audio clips. Yasa-1 gives businesses the flexibility to customize it on private datasets spanning different modalities, enabling new experiences for a range of use cases. The assistant can handle long-context documents, execute code, and provide contextually relevant answers drawn from the internet. It supports 20 languages.

- In December 2023, Meta announced plans to roll out multimodal AI features that gather ambient data using the cameras and microphones on its smart glasses. By saying "Hey Meta," users of the Ray-Ban smart glasses can call up a virtual assistant that can see and hear what is happening in their immediate surroundings.

- Report ID: 6472

- Published Date: Sep 18, 2025

- Report Format: PDF, PPT


Copyright © 2026 Research Nester. All Rights Reserved.