Multimodal AI Market Outlook:

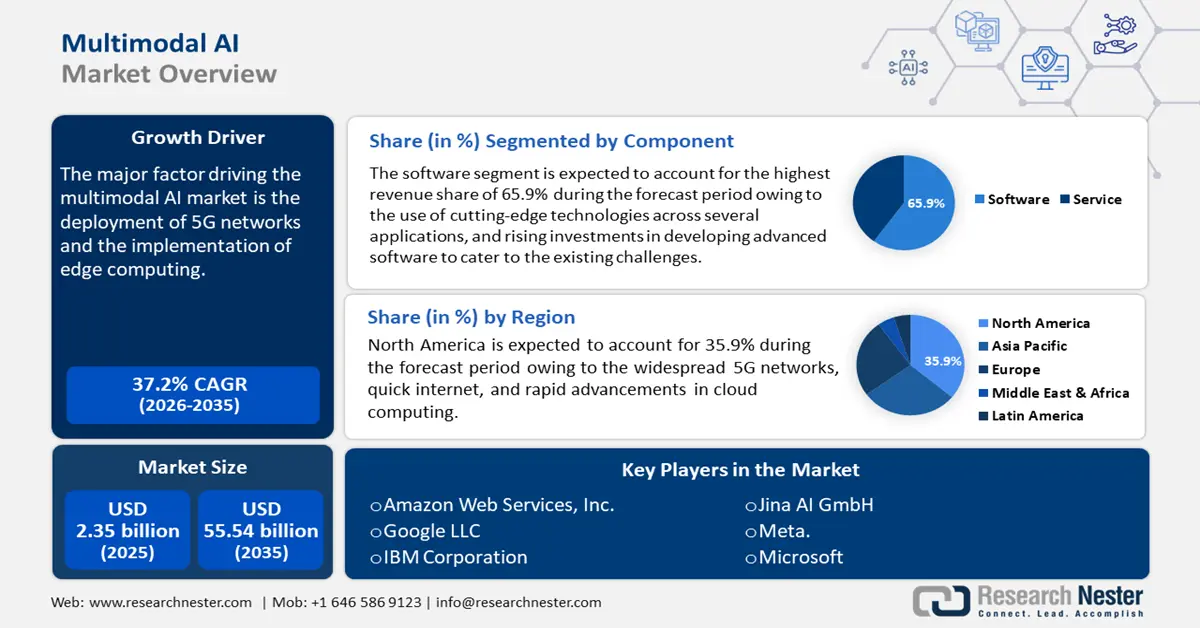

The multimodal AI market size was over USD 2.35 billion in 2025 and is poised to exceed USD 55.54 billion by 2035, growing at a CAGR of over 37.2% during the forecast period, i.e., 2026 to 2035. In 2026, the industry size of multimodal AI is estimated at USD 3.14 billion.
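The growth rate quoted above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The following is a minimal sketch of that arithmetic, not the report's own methodology; the function names `cagr` and `project` are illustrative, and the choice of base year (2025 or 2026) is an assumption that shifts the result slightly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures taken from the paragraph above (USD billion).
size_2025 = 2.35
size_2026 = 3.14
size_2035 = 55.54

# Assumption: 2025 is the base year, giving 10 compounding periods.
rate_from_2025 = cagr(size_2025, size_2035, 10)

# Alternative assumption: 2026 is the base year, giving 9 periods.
rate_from_2026 = cagr(size_2026, size_2035, 9)

print(f"CAGR 2025-2035: {rate_from_2025:.1%}")
print(f"CAGR 2026-2035: {rate_from_2026:.1%}")
print(f"2035 size implied by the 2025 base: {project(size_2025, rate_from_2025, 10):.2f}")
```

With 2025 as the base year the result is about 37.2%, in line with the stated figure. Using the 2026 estimate as the base gives a slightly higher rate, which is consistent with the "over 37.2%" wording.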

The major factor driving the multimodal AI market is the deployment of 5G networks and the implementation of edge computing across several sectors. Edge computing reduces latency and bandwidth consumption for real-time multimodal AI applications by processing data closer to the source. This is particularly useful for Internet of Things (IoT) devices and smart systems, which require quick data processing to function properly. The introduction of 5G has enhanced network capabilities, providing the dependability and speed needed to handle large volumes of multimodal data. For instance, Datasea, Inc.’s Chinese subsidiaries, Shuhai Information Technology Co., Ltd. and Guozhong Times Technology Co., Ltd., signed an agreement with Qingdao Ruizhi Yixing Information Technology Co., Ltd. to supply the company with a new range of advanced 5G-AI multimodal services.

The rise of multimodal AI can be attributed to advancements in human-machine interfaces, which give consumers more intuitive and natural ways to engage with technology. Speech, writing, gestures, and visual signals are just a few of the inputs that multimodal AI combines to improve its understanding of, and response to, human commands. As a result, experiences have become smoother and more immersive across various applications. In March 2024, Apple announced its first customized multimodal AI model, MM1, which could revolutionize Siri and iMessage by analyzing text and images contextually. In-context learning enables the model to describe images and answer questions about photo-based prompts, even for content it hasn’t seen before.