Content Moderation Services Market Outlook:

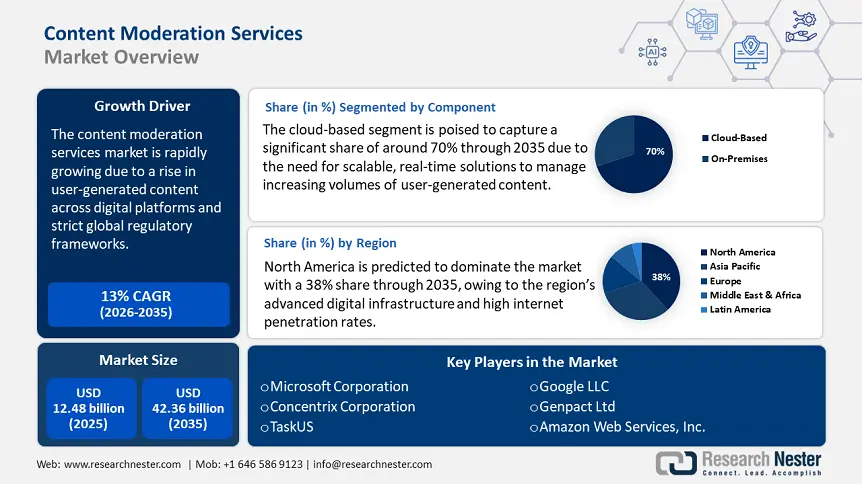

Content Moderation Services Market size was valued at USD 12.48 billion in 2025 and is expected to reach USD 42.36 billion by 2035, registering a CAGR of around 13% over the forecast period (2026-2035). In 2026, the industry is estimated at USD 13.94 billion.
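The headline figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the report's own market-size figures. The function name `cagr` is illustrative, and the check says nothing about how the forecast itself was produced.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the report, in USD billion.
size_2025 = 12.48
size_2026 = 13.94
size_2035 = 42.36

# Growth over the full decade from the base year, and over the nine forecast years from 2026.
rate_2025_2035 = cagr(size_2025, size_2035, 10)
rate_2026_2035 = cagr(size_2026, size_2035, 9)

print(f"2025-2035 CAGR: {rate_2025_2035:.1%}")
print(f"2026-2035 CAGR: {rate_2026_2035:.1%}")
```

Both windows come out at roughly 13% (about 13.0% for 2025-2035 and 13.1% for 2026-2035). The 2025-to-2026 step alone implies about 11.7% growth (13.94 / 12.48), so the 13% figure reads best as an average over the decade rather than a constant yearly rate.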

The market is primarily driven by the rise in user-generated content across digital platforms. The exponential growth of social media platforms and interactive digital forums has produced an unprecedented volume of user-generated content. Platforms such as TikTok, Reddit, YouTube, and Meta properties see billions of uploads and interactions daily. In India, Meta took action on more than 21 million pieces of content on Facebook and Instagram in May 2024 (15.6 million on Facebook and more than 5.8 million on Instagram) because the content violated Meta's policies. This action is part of Meta's ongoing efforts to comply with the IT (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021.

To manage this scale while preserving user trust and regulatory compliance, companies are increasingly investing in scalable, AI-augmented content moderation solutions. For instance, in November 2024, Reddit significantly expanded its presence in international markets, particularly in India and Latin America, driving a surge in multilingual user-generated content. With this growth, Reddit scaled up its content moderation infrastructure by partnering with third-party moderation firms and improved its AI-driven flagging systems to manage real-time discussions across new cultural contexts.

Key Content Moderation Services Market Insights Summary:

Regional Highlights:

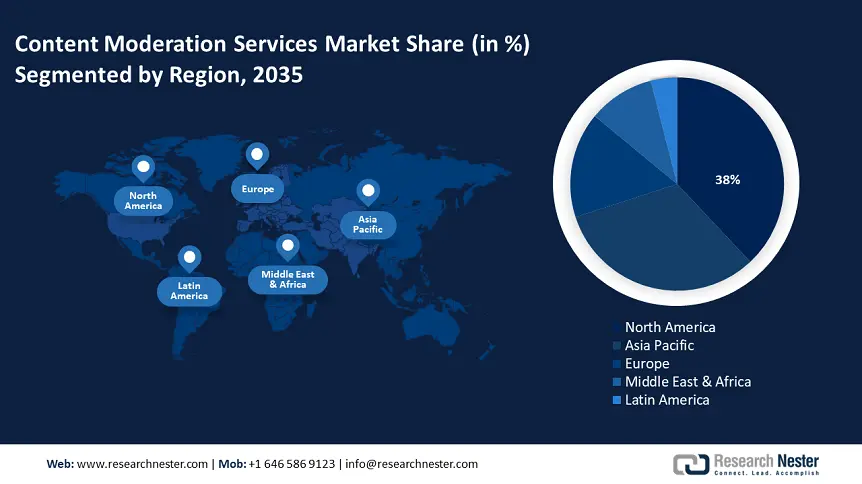

- North America dominates the Content Moderation Services Market with a 38% share, driven by the region’s advanced digital infrastructure and high internet penetration rates, supporting sustained growth through 2035.

Segment Insights:

- The cloud-based deployment segment is forecast to capture a 70% market share by 2035, propelled by the need for scalable, real-time solutions to manage increasing content volumes.

- The text segment is projected to hold a 45% share by 2035, driven by the surge in user-generated content across digital platforms requiring robust moderation.

Key Growth Trends:

- Increasingly strict global regulatory frameworks

- Brand safety and consumer trust imperatives

Major Challenges:

- Accuracy vs. scale in AI moderation systems

- Mental health impact on human moderators

- Key Players: Microsoft Corporation, Cognizant Technology Solutions Corporation, Concentrix Corporation, Genpact Ltd., Teleperformance SE, TaskUs.

Global Content Moderation Services Market Forecast and Regional Outlook:

Market Size & Growth Projections:

- 2025 Market Size: USD 12.48 billion

- 2026 Market Size: USD 13.94 billion

- Projected Market Size: USD 42.36 billion by 2035

- Growth Forecasts: 13% CAGR (2026-2035)

Key Regional Dynamics:

- Largest Region: North America (38% Share by 2035)

- Fastest Growing Region: North America

- Dominating Countries: United States, China, United Kingdom, Germany, Japan

- Emerging Countries: China, India, Brazil, Russia, Mexico

Last updated on: 12 August 2025

Content Moderation Services Market Growth Drivers and Challenges:

Growth Drivers

- Increasingly strict global regulatory frameworks: Governments are introducing stricter regulations covering digital safety, misinformation, child protection, and data privacy. Notable frameworks include the Digital Services Act (EU), the Children’s Online Privacy Protection Act (COPPA, US), the Online Safety Act (UK), and the IT Rules (India). These laws require platforms to implement proactive moderation mechanisms to detect and mitigate harmful content, driving demand for specialized moderation service providers. For instance, in February 2024, under the DSA, platforms such as Meta, TikTok, and X were required to publish transparency reports and demonstrate that harmful content is removed swiftly and systematically.

- Brand safety and consumer trust imperatives: As brands become increasingly tied to digital interactions, businesses are investing in moderation to avoid association with offensive or controversial content. For instance, several global brands, including Unilever and Nestlé, temporarily pulled advertising from YouTube after their ads were found running alongside conspiracy theory videos. In response, YouTube upgraded its content moderation algorithms and launched an initiative allowing advertisers to apply stricter content filters. In sectors such as advertising, e-commerce, and influencer marketing, unmoderated content can rapidly erode customer trust and cause reputational damage. As a result, content moderation is now closely tied to brand safety and has become a critical investment for companies with strong digital customer engagement. Vendors offering proactive moderation and real-time threat detection are gaining competitive traction.

- Advancements in AI- and ML-based moderation tools: The evolution of AI technologies, especially Natural Language Processing (NLP), computer vision, and context-aware sentiment analysis, has significantly improved the accuracy, speed, and scalability of automated moderation systems. This has enabled platforms to moderate content at scale with greater efficiency and contextual understanding, reducing the burden on human moderators. Tech-driven moderation startups with proprietary AI models are drawing strong investor interest. Strategic acquisitions and partnerships are also rising, as large players seek to integrate these capabilities to improve service agility and cost-effectiveness.

Challenges

- Accuracy vs. scale in AI moderation systems: While AI and machine learning tools have greatly improved the scalability of content moderation, they still struggle to interpret context, cultural nuance, sarcasm, and multilingual content accurately. These limitations produce false positives, where legitimate content is wrongly flagged, and false negatives, where harmful content slips through; both remain persistent issues in sensitive areas such as health, politics, and social activism. The real challenge lies in striking the right balance between speed and precision without becoming overly dependent on human moderators.

- Mental health impact on human moderators: Human moderators, often essential for reviewing edge cases and live content, are frequently exposed to disturbing or violent material. This has led to rising concerns about occupational trauma, burnout, and legal liabilities, with several high-profile lawsuits drawing attention to the issue. Thus, ensuring ethical working conditions and psychological support for moderators adds operational costs and complexity to service delivery.
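The trade-off between false positives and false negatives described above is commonly quantified with precision (the share of flagged items that were actually harmful) and recall (the share of harmful items that were flagged). The Python sketch below computes both for two hypothetical filter settings. The class name `ModerationOutcomes` and all counts are invented for illustration and are not data from this report.

```python
from dataclasses import dataclass


@dataclass
class ModerationOutcomes:
    """Hypothetical counts from comparing automated flags with human review labels."""
    true_positives: int   # harmful items correctly flagged
    false_positives: int  # legitimate items wrongly flagged
    false_negatives: int  # harmful items the filter missed
    true_negatives: int   # legitimate items correctly left up

    def precision(self) -> float:
        """Share of flagged items that were actually harmful."""
        flagged = self.true_positives + self.false_positives
        return self.true_positives / flagged if flagged else 0.0

    def recall(self) -> float:
        """Share of harmful items that the filter caught."""
        harmful = self.true_positives + self.false_negatives
        return self.true_positives / harmful if harmful else 0.0


# Invented counts for two threshold settings on the same 10,000 items (500 harmful).
strict = ModerationOutcomes(true_positives=480, false_positives=320,
                            false_negatives=20, true_negatives=9180)
lenient = ModerationOutcomes(true_positives=400, false_positives=40,
                             false_negatives=100, true_negatives=9460)

for name, outcome in [("strict", strict), ("lenient", lenient)]:
    print(f"{name}: precision={outcome.precision():.2f}, recall={outcome.recall():.2f}")
```

With these made-up numbers, the stricter setting catches 96% of harmful items but wrongly flags 320 legitimate ones (precision 0.60), while the lenient setting flags far fewer legitimate items (precision about 0.91) but misses 20% of harmful content. Neither setting removes the trade-off; it only moves the errors between the two categories.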

Content Moderation Services Market Size and Forecast:

| Report Attribute | Details |
|---|---|
| Base Year | 2025 |
| Forecast Period | 2026-2035 |
| CAGR | 13% |
| Base Year Market Size (2025) | USD 12.48 billion |
| Forecast Year Market Size (2035) | USD 42.36 billion |
| Regional Scope | |

Content Moderation Services Market Segmentation:

Content Type (Text, Image, Video)

The text segment of the content moderation services market is expected to hold the largest share, 45%, by 2035, owing to the growing volume of user-generated content across digital platforms. The proliferation of social media, e-commerce, and online forums makes robust moderation essential for managing and filtering vast amounts of textual data. Advances in artificial intelligence and machine learning have improved the efficiency and accuracy of text moderation tools, enabling real-time analysis and identification of harmful or inappropriate content.

A recent example highlighting the importance of text content moderation is the French Open’s use, in 2024, of AI-powered tools from the French company Bodyguard. These tools were employed to filter online hate targeting athletes on X, Instagram, Facebook, and TikTok. Bodyguard’s technology screened abusive messages within milliseconds across 45 languages, so that they remained visible only to their senders. The initiative aimed to protect athletes’ mental health and support their performance, demonstrating the critical role of text content moderation in maintaining a safe online environment.

Deployment Mode (Cloud-Based, On-Premise)

The cloud-based segment of the content moderation services market is poised to capture a significant share of around 70% through 2035, owing to the need for scalable, real-time solutions to manage increasing volumes of user-generated content. Cloud deployment offers flexibility and rapid integration, enabling businesses to adapt quickly to evolving content moderation requirements. Major cloud service providers such as Microsoft Azure, Amazon Web Services (AWS), and Google Cloud offer content moderation tools that use artificial intelligence and machine learning to improve accuracy and efficiency. For instance, Microsoft's Azure Content Moderator provides machine-assisted moderation of text and images, allowing businesses to detect potentially offensive material effectively.

Our in-depth analysis of the global content moderation services market includes the following segments:

- Content Type

- Deployment Mode

- Component

- Organization Size

- Industry Vertical


Content Moderation Services Market Regional Analysis:

North America Market Analysis

The North America content moderation services market is predicted to dominate with a 38% share through 2035, owing to the region’s advanced digital infrastructure and high internet penetration rates. The U.S. and Canada are the primary contributors to this expansion, with a strong focus on online safety and brand integrity. The increasing volume of user-generated content across platforms calls for sophisticated moderation solutions to ensure compliance with community standards and legal requirements. Demand is further amplified by the presence of leading technology companies in the region, which are investing heavily in content moderation services to enhance user experience and trust.

In the U.S., the content moderation landscape is heavily shaped by regulatory frameworks such as Section 230 of the Communications Decency Act, which shields platforms from liability for user-generated content while allowing them to set their own moderation policies. However, more recent legislation, such as California’s AB 587, requires social media companies to disclose their content moderation practices, particularly concerning hate speech, extremism, and misinformation. These regulations push platforms to adopt more transparent and robust moderation strategies, driving the growth of content moderation services in the country.

The content moderation services market in Canada is experiencing significant growth, propelled by the government’s proactive stance on online safety. The proposed Online Harms Act, introduced in February 2024, would require social media platforms to remove certain harmful content, such as material that sexually exploits children, within 24 hours. This legislative move highlights Canada’s commitment to fostering a safer digital environment and increases demand for robust content moderation solutions. Moreover, Canada’s high internet penetration rate and active social media user base contribute to a growing volume of online content, underscoring the need for efficient content moderation services.

Asia Pacific Market Analysis

Asia Pacific is anticipated to garner a significant share from 2025 to 2035 due to the region’s rapid digital transformation and diverse linguistic and cultural landscape, which calls for tailored moderation strategies. Leading countries in the region are witnessing a surge in user-generated content across platforms, driven by increasing internet penetration and smartphone adoption. This growth compels platforms to invest in advanced moderation tools to manage content effectively and ensure compliance with local regulations.

China’s content moderation services market is expanding due to stringent regulatory frameworks and the proliferation of digital platforms. The government’s emphasis on controlling online content, including mandatory labeling of AI-generated content, requires robust moderation solutions. Additionally, the rise of short-form video platforms and the need to manage content in various dialects contribute to growing demand for specialized moderation services. Together, these factors drive the market’s growth as platforms work to ensure compliance and maintain the integrity of China’s digital ecosystem.

The content moderation services market in South Korea is experiencing significant growth due to the government’s stringent measures against digital sex crimes, particularly deepfake pornography. In 2024, the government enacted laws criminalizing the possession and viewing of deepfake pornography, with violators facing up to three years in prison or fines of up to 30 million won. This legislative action has increased demand for advanced content moderation solutions that can detect and prevent the distribution of such harmful content.

Key Content Moderation Services Market Players:

- Amazon Web Services, Inc.

  - Company Overview

  - Business Strategy

  - Key Product Offerings

  - Financial Performance

  - Key Performance Indicators

  - Risk Analysis

  - Recent Development

  - Regional Presence

  - SWOT Analysis

- Microsoft Corporation

- Cognizant Technology Solutions Corporation

- Concentrix Corporation

- Genpact Ltd.

- Teleperformance SE

- TaskUs

- Cogito Tech

- iMerit

- WebFurther, LLC

The market is led by Microsoft, Google, and Amazon Web Services, which offer scalable AI-driven tools, alongside specialized firms such as TaskUs and Teleperformance, which compete with robust human-in-the-loop moderation models.

Recent Developments

- In June 2024, Gcore, a global AI, cloud, and security company, launched Gcore AI Content Moderation. The service helps online providers automatically review audio, text, and video content without prior AI or machine learning expertise, helping organizations improve user safety and comply with regulations such as the EU’s Digital Services Act (DSA) and the UK’s Online Safety Bill (OSB).

- In March 2023, iMerit, a leading AI data solutions company, announced a new tool designed to help video game makers build better AI-powered content moderation and community management systems. iMerit’s solution helps studios strengthen their gaming communities by using advanced automatic speech recognition (ASR) to improve player safety and experience.

- Report ID: 7630

- Published Date: Aug 12, 2025

- Report Format: PDF, PPT


Copyright @ 2026 Research Nester. All Rights Reserved.