Posted Date: 15 September 2025

Posted by: Sanya Mehra

Autonomous vehicles are no longer a futuristic concept; they have become a reality, driven largely by innovative artificial intelligence (AI) and advanced sensor systems. These two components work together to ensure efficient navigation and safety. AI has become the backbone of autonomous vehicles by enabling sensing and perception: it processes sensor data to detect and classify objects such as obstacles, other vehicles, and pedestrians, supporting safe navigation.

Real-time decision-making draws on the computational resources within each vehicle to optimize decisions based on vehicle mobility. In this regard, the Science and Information Organization stated in its 2024 report that this approach improves reaction time by approximately 34% compared with other techniques. In addition, its prediction accuracy is 97.8%, which leads to an effective reduction in time. As autonomous vehicles rapidly grow in complexity, integrating cloud-based reinforcement learning (RL) models is poised to be a promising way to boost decision-making capabilities.

What Is The Role of Artificial Intelligence in Autonomous Vehicles?

AI is the brain behind autonomous vehicles, enabling them to perceive, decide, and act much like human drivers. The transition towards automation offers increased convenience, reduced human risk, and avenues for significant environmental and economic benefits. As stated in a December 2024 NLM article, Tesla’s Autopilot has been linked to 17 deaths and 736 crashes in previous years. Such data highlights the pressing need for robustness in autonomous vehicles, meaning consistent operation across varied real-world circumstances, including fluctuating weather conditions, complicated traffic patterns, and unexpected road events.

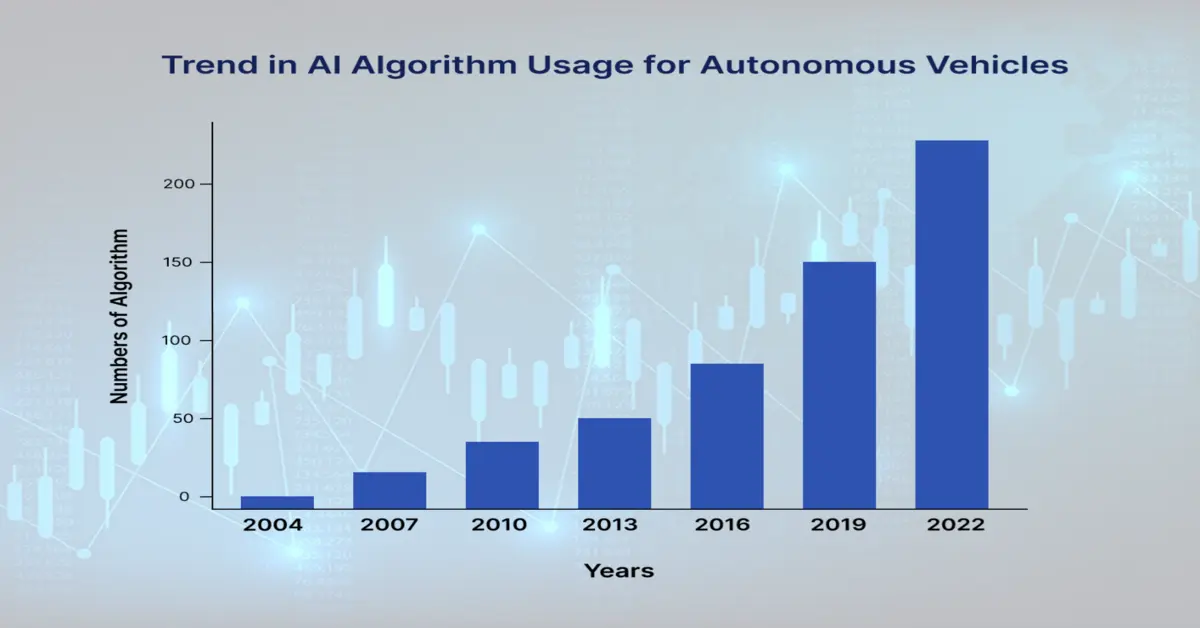

Source: MDPI

Machine Learning in Autonomous Vehicles

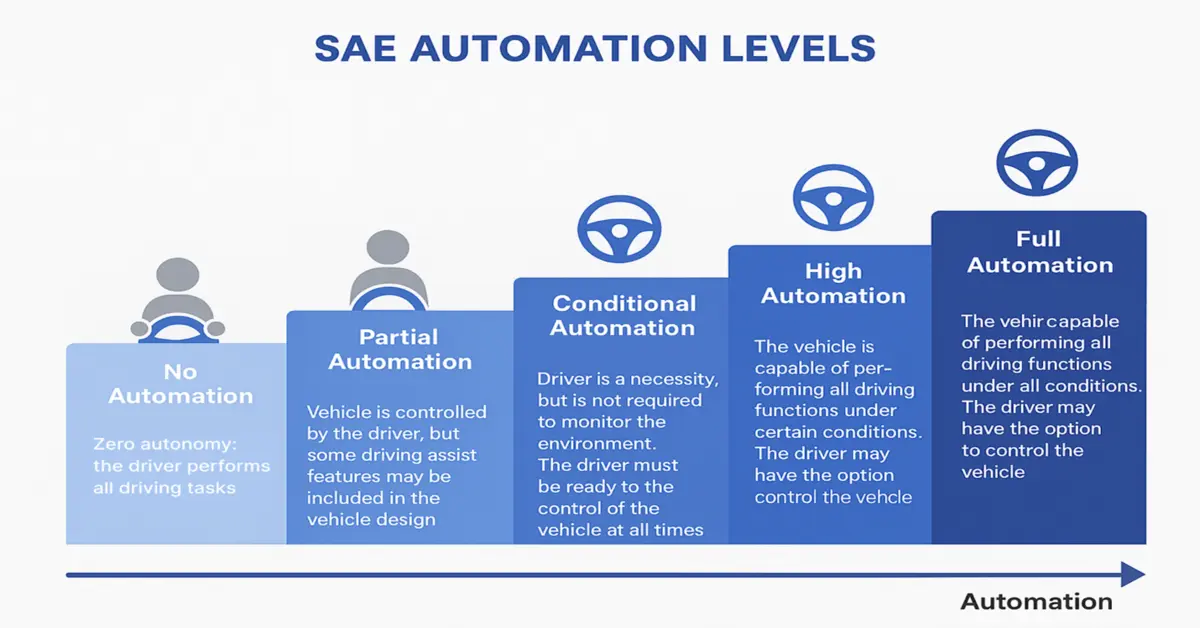

Machine learning (ML) is a branch of AI used to build computer programs that learn from data. It makes it possible to perform and complete specific tasks without human intervention, with the program improving over time as it is exposed to more data. As per an article published in Machine Learning with Applications in December 2021, an estimated 94% of road accidents are caused by driver error, including distraction and improper maneuvers. Meanwhile, both car manufacturers and non-automotive organizations are developing vehicles at different automation levels, as defined by the Society of Automotive Engineers (SAE) (see Figure 1).

Source: Science Direct
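As a rough illustration of how the SAE automation levels can be modeled in software, the sketch below encodes the six levels of the SAE J3016 standard as an ordered enum. The member names paraphrase the standard, and the helper functions `human_must_supervise` and `human_fallback_required` are hypothetical names chosen for this example, not part of any real library.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation (member names are paraphrased)."""
    NO_AUTOMATION = 0           # the human does all of the driving
    DRIVER_ASSISTANCE = 1       # steering OR speed support, e.g. adaptive cruise control
    PARTIAL_AUTOMATION = 2      # steering AND speed support; the human supervises constantly
    CONDITIONAL_AUTOMATION = 3  # system drives in set conditions; human takes over on request
    HIGH_AUTOMATION = 4         # no takeover needed, but only within a limited operating domain
    FULL_AUTOMATION = 5         # drives under all conditions, with no operating-domain limits


def human_must_supervise(level: SAELevel) -> bool:
    """Hypothetical helper: at Levels 0-2 the driver must monitor the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_fallback_required(level: SAELevel) -> bool:
    """Hypothetical helper: up to Level 3 a human must be ready to drive when asked."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION
```

Because `IntEnum` members compare as integers, questions such as "does this level still need a human fallback?" reduce to a single comparison.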

Computer Vision for Object Recognition

AI systems can recognize road signs, vehicles, and pedestrians using deep learning models such as Convolutional Neural Networks (CNNs), which analyze images and video from cameras mounted on the vehicle. The same techniques allow autonomous vehicles to perform haze removal and robust traffic sign detection and recognition (TSDR). As stated in an October 2021 MDPI article, road traffic deaths have reached around 1.35 million a year, and an estimated 20 to 50 million people are injured every year, strengthening the case for adopting autonomous vehicles.
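The core operation inside a CNN is a small kernel slid across an image. The minimal pure-Python sketch below applies a "valid" 2D convolution (technically cross-correlation, which is what CNN layers compute) followed by a ReLU. The toy image patch and the hand-set edge kernel are assumptions for illustration; a real CNN learns thousands of kernels from labeled data and stacks many such layers.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, the operation a CNN convolution layer applies."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest (the usual CNN activation)."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy 4x4 grayscale patch: dark on the left, bright on the right,
# loosely resembling the edge of a lane marking (assumed data).
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-set vertical-edge kernel; a trained CNN would learn its own kernels.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
features = relu(conv2d(patch, vertical_edge))
```

The resulting feature map responds strongly (value 18) only in the column where dark pixels meet bright ones, which is how early CNN layers highlight edges that later layers combine into shapes such as signs or pedestrians.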

Path Planning and Decision-Making

These are essential AI functionalities that allow autonomous vehicles to navigate roads safely and efficiently. AI analyzes real-time sensor data with advanced algorithms to forecast the ideal route while actively avoiding obstacles, including road hazards, other vehicles, and pedestrians. Techniques such as Model Predictive Control (MPC) provide smooth acceleration, lane changes, and braking. In addition, behavioral cloning allows AI to learn from human driving patterns and imitate expert decisions in complicated situations.
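To make the idea behind MPC more concrete, here is a deliberately simplified sketch of a longitudinal (speed-only) controller following a lead vehicle. Instead of a numerical optimizer, it brute-forces a small set of candidate acceleration sequences over a short horizon, scores each by speed-tracking error, harshness, and jerk, rejects any that violate a minimum gap, and applies only the first action. The constants and the names `rollout_cost` and `mpc_step` are hypothetical choices for this example, not taken from any production system.

```python
from itertools import product

# All constants below are illustrative assumptions, not values from any real vehicle.
DT = 0.5                              # control step, seconds
ACCELS = (-3.0, -1.5, 0.0, 1.0, 2.0)  # candidate accelerations, m/s^2
HORIZON = 3                           # steps the controller looks ahead
MIN_GAP = 10.0                        # minimum allowed gap to the lead vehicle, m


def rollout_cost(speed, gap, lead_speed, target_speed, accels):
    """Simulate one acceleration sequence and score it (lower is better)."""
    cost, prev_a = 0.0, 0.0
    for a in accels:
        speed = max(0.0, speed + a * DT)
        gap += (lead_speed - speed) * DT  # lead vehicle assumed to hold its speed
        if gap < MIN_GAP:
            return float("inf")  # hard constraint: never close the gap too far
        cost += (speed - target_speed) ** 2  # track the desired speed
        cost += 0.5 * a ** 2                 # discourage harsh acceleration/braking
        cost += 0.5 * (a - prev_a) ** 2      # discourage jerky changes
        prev_a = a
    return cost


def mpc_step(speed, gap, lead_speed, target_speed):
    """Pick the best sequence over the horizon, then apply only its first action."""
    def cost(seq):
        return rollout_cost(speed, gap, lead_speed, target_speed, seq)

    best = min(product(ACCELS, repeat=HORIZON), key=cost)
    if cost(best) == float("inf"):
        return min(ACCELS)  # no safe plan found: brake as hard as allowed
    return best[0]
```

Calling `mpc_step` again at every control step with fresh sensor readings gives the "receding horizon" behavior that characterizes MPC: the plan is recomputed continuously, so the vehicle accelerates on an open road and brakes when the gap to a slower vehicle shrinks.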

Critical Sensors Powering Self-Driving Cars

Autonomous vehicles depend on a collection of advanced sensors to observe their environment, each playing a distinct role in enabling safe and efficient self-driving. These sensors operate in tandem to build a comprehensive understanding of the vehicle’s surroundings, enabling real-time decision-making. Below are the key sensors, along with their functions, advantages, and limitations.

- LiDAR (Light Detection and Ranging): It uses laser pulses to produce high-resolution 3D maps of the vehicle’s surroundings, allowing detailed identification of objects, obstacles, and road curvature. Its centimeter-level accuracy makes it essential for thorough environmental mapping. However, it remains expensive: according to a January 2023 McKinsey report, the price of lidar-based Level 2+ capabilities ranges from USD 1,500 to USD 2,000. Even so, the LiDAR market continues to grow, with a valuation of USD 3.3 billion in 2025, as per a report by Research Nester.

- Radar (Radio Detection and Ranging): Radar excels at measuring the distance and speed of objects using radio waves, making it essential for collision avoidance and adaptive cruise control. Compared with cameras and LiDAR, radar performs well in poor visibility, including dust, rain, and fog, owing to its longer wavelength. In addition, advanced 4D radar systems, such as those developed by Arbe Robotics, improve resolution by adding elevation data and enhancing object classification.

- Cameras (Computer Vision-Based Perception): These provide rich visual data, crucial for detecting pedestrians, lane markings, and traffic signs through convolutional neural networks (CNNs). For instance, Tesla’s Full Self-Driving (FSD) system relies heavily on eight surrounding cameras for 360-degree perception. However, as per a 2023 IEEE study, heavy rain at 80 mm/h reduces camera-based object identification accuracy by almost 40%, highlighting the need for sensor fusion in autonomous vehicles.

- Ultrasonic Sensors: These use sound waves for short-range detection of up to 5 meters, making them suitable for low-speed maneuvers and parking assistance. They are highly cost-effective and perform consistently in all weather conditions, but their restricted range limits them to supplementary roles. As per a 2024 Bosch report, ultrasonic sensors are installed in more than 80% of the latest ADAS-equipped vehicles, reflecting their wide adoption for proximity sensing.
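The ranging principles behind these sensors reduce to simple physics. LiDAR and ultrasonic sensors time an echo and halve the round trip (light versus sound), while radar can also estimate a target's radial speed from the Doppler shift of the returned wave. The sketch below assumes pulsed time-of-flight ranging and a 77 GHz automotive radar carrier; the function names and example readings are hypothetical, and real sensors (for example FMCW radars) use more elaborate signal processing.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
SPEED_OF_SOUND = 343.0          # m/s, in air at roughly 20 °C


def time_of_flight_range(round_trip_s, wave_speed):
    """Distance to a target from an echo's round-trip time (out and back, so halve it)."""
    return wave_speed * round_trip_s / 2


def doppler_radial_speed(doppler_shift_hz, carrier_hz):
    """Radial speed of a target from a radar Doppler shift: v = f_d * c / (2 * f_c)."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2 * carrier_hz)


# Hypothetical readings, chosen only to illustrate the arithmetic.
lidar_m = time_of_flight_range(200e-9, SPEED_OF_LIGHT)     # about 29.98 m
ultrasonic_m = time_of_flight_range(0.01, SPEED_OF_SOUND)  # 1.715 m
radar_mps = doppler_radial_speed(10_000.0, 77e9)           # about 19.47 m/s
```

The same equation explains the different roles: light returns in nanoseconds, which is why LiDAR needs very precise timing, whereas sound returns in milliseconds, which suits the short ranges used for parking assistance.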

How Does AI Process Sensor Data in Real Time?

Autonomous vehicles depend on artificial intelligence to interpret and act on sensor data within milliseconds, faster than human drivers can. By combining input from multiple sensors with advanced algorithms, AI enables precise, real-time decision-making, which is pivotal for safety.

Sensor Fusion: Combining Multiple Data Streams

No single sensor provides complete environmental awareness, so AI fuses data from ultrasonic sensors, cameras, radar, and LiDAR to offset each sensor’s individual limitations. For instance, LiDAR provides detailed 3D mapping, while cameras offer contextual details such as traffic light colors. As per an article published by the U.S. Department of Transportation in 2022, sensor fusion can reduce object misclassification by almost 60% compared with single-sensor systems.
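One of the simplest fusion rules, and the static case of a Kalman filter measurement update, is inverse-variance weighting: each sensor's estimate is weighted by how certain it is, and the fused result is more certain than any single input. The sketch below assumes independent measurements of the distance to the same object; the variance values are illustrative assumptions, not sensor specifications, and `fuse` is a hypothetical helper.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) estimates."""
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused variance is smaller than any input variance


# Distance to the same object in metres, with variances in m^2 (assumed values).
lidar = (25.0, 0.01)   # precise range measurement
radar = (25.6, 0.25)   # noisier range measurement
camera = (24.0, 1.0)   # monocular depth estimate, least certain here
distance, variance = fuse([lidar, radar, camera])
```

The fused distance lands close to the LiDAR reading because it is the most certain, yet the radar and camera still tighten the result. If the LiDAR reading were degraded (for example, by assigning it a larger variance in fog), the weighting would automatically shift toward radar.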

AI’s Response Time versus Human Reaction Time

According to a 2021 NHTSA report, AI processes data and responds to hazards in 100 to 200 milliseconds, whereas human drivers average 1.5 seconds in emergencies. In addition, a study published by the IIHS in June 2020 demonstrated that autonomous vehicles equipped with sensor fusion could prevent approximately 34% of collisions, as well as over 5,000 crashes caused by delayed human reactions. The National Science Foundation noted in 2023 that advances in edge computing now enable autonomous vehicles to make decisions more rapidly than earlier models, marking a significant improvement in collision avoidance.
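The practical meaning of this time gap is easiest to see as distance: before any braking starts, a vehicle keeps moving at full speed for the whole reaction time, so reaction distance is speed multiplied by reaction time. The sketch below compares the 1.5-second human average with the upper 200-millisecond AI figure cited above, at an assumed highway speed of 100 km/h; `reaction_distance_m` is a hypothetical helper name.

```python
def reaction_distance_m(speed_kmh, reaction_time_s):
    """Distance travelled before braking begins: d = v * t, with v converted to m/s."""
    return speed_kmh / 3.6 * reaction_time_s


speed = 100.0                              # km/h, example highway speed (assumed)
human = reaction_distance_m(speed, 1.5)    # about 41.7 m
ai = reaction_distance_m(speed, 0.2)       # about 5.6 m
saved = human - ai                         # about 36.1 m
```

At this speed, the faster response leaves roughly 36 extra metres of road for braking, which is where much of the collision-avoidance benefit comes from.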

The Future of AI and Sensor Technology in Autonomous Vehicles

The coming decade is expected to bring transformative progress in autonomous vehicle technology, driven largely by advances in sensor and AI systems. Two developments in particular are likely to shape this evolution:

- The Role of 5G & V2X (Vehicle-to-Everything): V2X communication and 5G networks are poised to provide real-time data exchange between vehicles, traffic signals, and road infrastructure, reducing dependency on onboard sensors. The U.S. Department of Transportation estimated in April 2023 that V2X could prevent almost 615,000 crashes every year by 2030. In 2022, the 5G Automotive Association reported latency below 1 millisecond, enabling immediate hazard alerts, which are critical for high-speed autonomy.

- Arrival of Level 5 Autonomy: At present, Cruise and Waymo operate Level 4 prototypes, and most experts predict widespread Level 5 deployment between 2030 and 2035. As per a February 2025 AAA report, almost 13% of U.S. drivers prefer self-driving vehicles, up from 9% in previous years. In addition, according to an August 2023 McKinsey report, 95% of new vehicles will be commercialized by 2030, of which 12% will be equipped with Level 3 or Level 4 autonomy.

The Road Ahead for AI-Driven Autonomous Vehicles

Autonomous vehicles represent an extraordinary synthesis of AI intelligence and sensor accuracy, working in harmony to perceive, decide, and navigate the road. Yet despite rapid progress, achieving fully self-driving technology remains an ongoing journey, with challenges including persistent public skepticism, regulatory hurdles, and edge-case scenarios. While AI has the potential to deliver safer and more efficient roads, the evolution to full autonomy still demands ongoing innovation and societal adaptation. As we stand at the junction of this transport revolution, one question remains: Would you prefer a car that drives itself, or do you still favor human control? The answer may shape the future of mobility.
